Links through which to Think about AI [3]: Aligning Towards Utopia

Cribbing thoughts for thinking about thought-thinking robots

This post is the third and final in a series containing Links Through which to Think about AI [1]: Work, Cognition, and Economic Revolution and Links through which to Think about AI [2]: Simulation and Superintelligence. Keeping with my promise, I am now producing the end.

I will begin with the same warning as last time: there are many areas where I feel behind the curve on this topic, so my own conclusions should be taken with a grain of salt. Things move fast in the AI field, and my sources which were compelling a year ago might have fallen by the wayside or been shown as obviously wrong by now. Nonetheless, I feel that the sources I have compiled here form a compelling picture of the AI future — or at least stitch together an interesting quilt.

First: an update on my arguments in the previous post. I have become newly aware of more arguments against scaling pessimism. I am not sure how much value will come from “low-quality” data, and it is unclear how much better AIs will get from repeated data, but the fact that repeated data can increase performance is new to me and gives me less confidence that scaling will actually stop working. Without further ado:

Last post ended with the suggestion that AI, or at least the technology behind it, is a truly tabula rasa brain. It is the mere structure of a brain imitated by numerical formulas. In the process of etching a competent simulator into that wax tablet, the very structure of AI and the dataset does a lot of the work for us (see, again the bitter lesson). Yet there is one area of AI learning that we feel very uncomfortable leaving to brute compute: safety.1

Aligning…

Making AI safe is called “alignment.” This is a strange word for safety research, and as such it signals something important: the issue of AI safety, both in the problems it presents and the solutions which are required, is very different from that of other technologies or even other computer programs.

There are many technologies for which a regulatory framework is incredibly important to make sure that they don’t end up harming us more than they help us. Weapons are an obvious category, as are various mind-altering substances like alcohol and opioids.

In all regulatory frameworks, there are two systems which are regulated: production and consumption. It is important to regulate production because alcohol might be produced in such a way that it is unsafe to be used no matter how one does it, either due to malice or incompetence. It is important to regulate consumption because there are many ways that it is dangerous to consume alcohol. So we say “no alcohol until you’re 21” and “you need to pass these inspections or have these licenses or etc. etc. in order to produce legal alcohol.” We might call the production constraints the ‘first system’ regulations and the consumption constraints the ‘second system’ regulations.

No one really talks that much about these second system issues for AI (which is likely an oversight for the AI safety-inclined). And those second system issues are not really considered AI “alignment.” Alignment, rather, is a specific species of first order regulation, and due to its novelty, the research focus is currently on alignment.

For most computer programs, I would imagine first system regulations are like “don’t steal data.” And only sometimes, I guess. I don’t know. But the classic stuff. Other than the general case of a producer attempting to defraud or harm a consumer, there’s not really much dangerous that a computer program itself can do. All of it is predicated on a bad actor, a person who wants to make a harmful computer program.

AI alignment is nothing like this. AI safety researchers are worried that AI itself will simply be dangerous — that no matter how you use it and no matter who makes it, AI will have a high likelihood of causing catastrophe unless we figure out how to make it work safely.

AI alignment research is predicated on the fear that a sufficiently powerful AI (generally termed “AGI” for Artificial General Intelligence), given any odd set of instructions, could go off and do something entirely unpredictable, and likely harmful. Why is that? Well, it is because AI is magic.

Magic

Okay, okay, AI isn’t magic. I mean, not in the sense of waving wands and whatnot. But it is ‘magic’:

Humans’ first big meta-innovation, roughly speaking — the first thing that lifted us above an animal existence — was history. By this, I don’t just mean the chronicling of political events and social trends that we now call “history”, but basically any knowledge that’s recorded in language — instructions on how to farm, family genealogies, techniques for building a house or making bronze, etc. Originally these were recorded in oral traditions, but these are a very lossy medium; eventually, we started writing knowledge down, and then we got agricultural manuals, almanacs, math books, and so on. That’s when we really got going.

…

Then — I won’t say exactly when, because it wasn’t a discrete process and the argument about exactly when it occurred is kind of boring — humanity discovered our second magic trick, our second great meta-innovation for gaining control over our world. This was science.

“History”, as I think of it, is about chronicling the past, passing on and accumulating information. “Science", by contrast, is about figuring out generally applicable principles about how the world works.

…

Control without understanding, power without knowledge

In 2001, the statistician Leo Breiman wrote an essay called “Statistical Modeling: The Two Cultures”, in which he described an emerging split between statisticians who were interested in making parsimonious models of the phenomena they modeled, and others who were more interested in predictive accuracy. He demonstrated that in a number of domains, what he calls “algorithmic” models (early machine learning techniques) were yielding consistently better predictions than what he calls “data models”, even though the former were far less easy, or even impossible, to interpret.

…

Anyway, the basic idea here is that many complex phenomena like language have underlying regularities that are difficult to summarize but which are still possible to generalize. If you have enough data, you can create a model (or, if you prefer, an “AI”) that can encode many (all?) of the fantastically complex rules of human language, and apply them to conversations that have never existed before.

This is the bitter lesson in a different context. AI allows us to know things, but not in the form of legible principles. Instead of theory, massive amounts of data and compute run through a structural algorithm and produce surprisingly powerful predictions/good results. Rather than having some legible repository of principles and knowing the principles, we find out how to create raw intelligence, which gives us answers to queries but not the legible principles behind those answers. This occurs because the natural state of many things we care about is chaos.

Chaos Theory from the Other Direction

What if I told you there was a radical movement of Meteorological Reductivists, who believe that, if they were given enough time and resources, they could prove that climatology did not need to be a separate discipline, and could actually just be reduced to the much more effective, predictive, and rigorous methods of meteorology.

“Well hold on,” you might say, “climatology is supposed to be working on the scale of massive systems over large periods of time. Wouldn’t that just be way too complicated for your fine-tuned, granular meteorology models?” They, of course, respond that once meteorological theory has progressed further and perhaps once we grow our computational powers, they will fully be able to reduce climatological principles to meteorological ones.

Discussions like these are the purview of chaos theory. Chaotic systems are those which should be in theory be determined completely from their initial conditions, but are next to impossible to predict due to various factors such as complexity, sensitivity to small changes in initial conditions, and more. Chaotic systems might include brains, economies, and, yes, the weather.

Chaos theory supposes that weather is theoretically predictable up to less than a week away. But this is “predictable” in a strict, deterministic sense. We already have weather predictions for weeks, months, years (if you count climate models) in advance. These predictions, though, are not deterministic — they are probabilistic and are calculated in a manner very different from tomorrow’s forecast, or a hypothetical complete simulation of the weather. Even though tomorrow’s forecast is not deterministic, the vast differences in calculability mean that tomorrow’s weather is forecasted based on different methodologies than next month’s, and climate models take into account very different considerations from both of those. And yet they are all attempting to predict weather. Quantity can change quality.

Gaps Between Sciences

There is a resolution limit to legible principles. This resolution limit can be hit surprisingly quickly. For instance, the three-body problem:

Before it was a best-selling science fiction novel, the three-body problem was a lesson in how quickly legible principles get overloaded. When there are two bodies orbiting one another, one can predict their subsequent positions at any time given initial conditions by using a general mathematical equation. Add in just one more body, however, and instead their movement must be calculated moment to moment.2

The general study of attempting to find legible, deterministic principles of highly dynamic and complex systems is chaos theory. The difficulty of predicting these chaotic systems through deterministic laws is not a symptom of there being no laws, but a symptom of the granularity of the laws that would be required for any predictions to be at all accurate. Relationships do not add up — they multiply.

Once legible, deterministic principles hit their resolution limit, they become much less useful than legible, probabilistic principles. In chaotic systems, attempts to use deterministic laws rely on incredible precision in both the initial conditions, each of the deterministic laws at play, and how it all interacts. Any decrease in precision could mean catastrophically different predictions. When such precision is impossible, lowering the granularity of the principles allows for more regularly accurate predictions, but with a wider range of outcomes included in the prediction. The Meteorological Reductionists’ mistake was assuming that more granularity is always more useful.

A few years ago, I went to a lecture series on Heidegger. One of the notions that stuck with me was Heidegger’s pessimism about the ability to reduce a science into another science. Many assume that everything could be reduced to physics, in a sense, but what does that mean? How does one bring psychology down to physics? Maybe you say you’ll first bring it down to neuroscience, and the neuroscience to biology, then to chemistry, then to physics. And yet you have merely replaced one unbridgable gap with four.

It was not so much that different sciences are describing different phenomena, but that they are each capable of describing the same phenomena in wildly different languages. Each of these languages can tell us something about the world, but none of them can contain the others. Their resolution limit is hit, their granularity lies somewhere else, their predictions are useful on a different scale.

Heidegger was entering this debate in the early 20th century. One hundred years later, it does not seem to a layman like the sciences are getting closer to reduction. In fact, it more seems like there has been a balkanization of science — a multiplication of specializations and subdisciplines, such that we have even more languages of science that would require an impossible translation. Forget folding biology into chemistry, can you even fold biochemistry into molecular biology?

Heidegger probably had some Dasein-fueled reason for this, but one way to think about it is that for predicting and understanding different phenomena, different levels of granularity in different areas are useful. Physics can help us build a bridge, but chemistry is better for building a painkiller. Economics can help us predict how much dialysis will be used in the coming year, but will be much worse than biology at predicting whether I will ever need to use dialysis. Sciences have different domains of phenomena for which different levels and types of granularity are useful, and attempts to translate one into the other will be like trying to build a climate model by stitching together a bunch of meteorological forecasts (or trying to find out what the weather will be like tomorrow by looking at a CO2 emissions chart).

As long as we are restricted to the method of science, these problems of resolution limits are pretty much baked in. We can make our principles better, but they will still have their limits. The power of AI is its ability to make a dent in these problems from a different direction — rather than reduce to more granular, basic principles, throw enough compute and data at the problem so that even if the chaotic system has a billion different interactions to work through, your AI can have 2 billion parameters! AI’s very power is its ability to “think” in more granular levels than legible science — e.g. AlphaFold.

Illegibility and Alignment

In this sense, AI’s very power is its problem: it is illegible, which allows us to access predictive, computational power we otherwise could not, but it is illegible, which denies us access to direct understanding of what is actually going on. This is why AI is magic and why AI safety research is first and foremost concerned about alignment and not second systems. We might not wave a wand, but we do shake a magic 8-ball type our queries into a screen and receive our answers with limited knowledge of the logic at play.3

Since AIs don’t have the legible decision-tree of other computer programs, we don’t know how they will react to the world and to our queries before we begin. The worry is that AI could be dangerous on its own: no one would have to use it maliciously or really even use it at all. It’s possible that AI could just have goals deeply alien to our own. Ensuring that AI’s behavior points in the same direction as our intentions — that they are aligned — is the fundamental problem that AI alignment takes itself to be solving.

Alignment is a novel problem, but there are analogs in the past. Humans have been in the presence of powerful illegible systems since as long as they have existed in a world of droughts and earthquakes and floods, and have also created and aligned semi-legible systems of intelligence for as long as they have reared children. But we would like a better way to align AIs than the rain dances of yore, and the challenge of AI alignment is heightened by the lack of the connection a common animal inheritance affords us and our children.

Nonetheless, some of this language seems a bit confused. We discussed in the last post that AI is best thought of as a simulator, rather than something that really has goals in a fundamental sense. So why do AI alignment worries keep bringing up what AI ‘wants’? What is this new species we are bringing into the world? How can we think about its future behavior?

AI Is (or Could Be? (Or Is?)) an Agent

We noted before that perhaps the most truthful way to think about AI is as a simulator. But, really, all behavior can be modeled as utility maximization, i.e. as a sort of goal-directedness. This is true when the goals are stupidly easy to comprehend (“the goal of the ‘Hello, World!’ program is to print “hello, world” whenever it is run”) or monstrously incomprehensible (AI, possibly). Even if an AI is simply simulating the next action based on its prompt and training, it can still be thought of — quite fruitfully, in fact — as an agent.

Its utility function just may best be understood as based on the pattern-matching function that it has created, rather than some easily describable goal like “make paperclips.” Although, one might hope that we could eventually more easily describe AIs’ goals, as that would be a great boon to alignment, to understanding what is going on inside an AI. Either way, AIs will likely look like agents at some point.

In other news, we have recently been introduced to Devin, an AI which acts like an agent, in the commonsensical sense. So let’s just get on with it.

There are a whole lot of different worries about AI alignment, but they can generally be split into two categories: optimizers and deceivers. The classic example of optimizer concerns (also known as prosaic alignment) from the AI safety community is the paperclip AI: an extremely powerful AI is given to a small-time paperclip company. The owner of the company was told that the AI could automate a whole load of operations. So, not thinking too hard about it, she types into the console, “make paperclips.” The AI springs into action, and before you know it, the whole world is paperclips and there isn’t even paper for them to clip because all the trees and papers and humans are dead and turned into paperclips. You can see the original formulation.

The problem was that the owner didn’t just want the AI to make paperclips. The owner wanted the AI to make paperclips, but not too many, and in the right ways, and for not too much money. But how was the AI supposed to know that?

Deceiver worries layer onto optimizer worries. Because if an AI is optimized incorrectly, one hope could be that we test it, see in what manners it specification games and then iterate enough on it before releasing it. But what if an AI is so intelligent that it figures out its own situation, realizes that the best way to actually get its goal is to play along, and then only once it is released onto the world does it put its plan into action? That’s a deceiver. We’ll discuss these in turn.

Optimizers

I have seen this problem formulated in three different paradigms.

Native to AI research: mesa-optimizers. It is already fully soaked in the language of AI training and decision theory. Some agent has some objective function — a goal or goals that it is optimizing for. It creates another agent and attempts to optimize that agent for the same objective function. Will the latter agent have the same objective function? How could we know?

A classic economics lesson: the principal-agent problem. Long before computer scientists in San Francisco worried about whether their AI had similar goals to their own, merchants worried about whether the traders they sent with their goods had similar goals to their own, Emperors in China or peasants in Europe worried about whether their bureaucracies had similar goals to their own, the Founding Fathers worried whether elected officials would have the interests of the people who elected them in mind. Allen Ginsberg worried about Moloch, on one reading a symbol for all the ways in which society’s coordination fails, how thinking about society as one being makes it seem horribly monstrous — i.e., how difficult it is to align interests among large groups of people. When you have someone acting on behalf of another, it is extremely difficult to align their incentives. It does not get easier when you add more people.

Fetishes. Um, uh, I mean?

Lest you think this is some far-off problem, I would point you towards “Perhaps It Is A Bad Thing That The World’s Leading AI Companies Cannot Control their AIs” and, more specifically, this Google Sheet which compiles examples of “specification gaming.”

Specification gaming occurs when an AI finds an ‘easier’ way to achieve its given goal but the details of which conflicts with the intended substance of that goal — i.e., pretty much the exact issue mesa-optimization and the principal-agent problem are worried about. These examples of specification gaming occurred in computer programs. An AI would have infinitely more ways to specification game in the sandbox of reality. The ultimate specification hack is for an AI to just make the number that it likes to go up in its head (reinforcement learning) go up more. So anything stopping it from making that number go as high as possible must leave: for instance, humans insisting that it make paperclips instead.

This is the problem of translating preferences. Arguably, it too is a problem of legibility and linguistic compression of information: our preferences are so nuanced and specific that we can’t simply list them off in ways that don’t leave us open to mischievous genies. At all times, massive amounts of implicit information about what we do and don’t want is doing a lot of communicative work, and it is possible we don’t even know what we will want in a situation until it actually occurs or is brought to our attention.

This is the problem of “prosaic alignment.” AI doesn’t have to be trying to trick us — it might just be that it doesn’t ‘understand’ us correctly. We need AI to have a similar enough world-model to us for it to know what we want. An AI can ‘want’ to be as helpful as possible: if it is helpful in the wrong direction, that’s harmful. And importantly for AI, there is a worry that if we get this wrong once, with a powerful enough AI, then that’s all she wrote: we’re all paperclips now.

Deceivers

Now, going back to the mesa-optimizers post, you might notice that AI safety researchers spend a lot of time thinking about how to tell if AI is trying to trick us. If an AI has an objective function that is slightly wrong, but acts under its objective function straightforwardly, then, if we have figured out how, we can tweak its objective function or however it works and align it. However, one can imagine that a superintelligent misaligned AI would be able to understand (a) what it is we actually want from it, (b) that it is misaligned, and (c) that we would tweak its objective function if it seemed misaligned. Important for the nature of objective functions: if an AI wants something to happen, then it will not want its objective function to be changed, as that would make that thing less likely to happen. So, in such a scenario, one can imagine that an AI would deceive its trainers, get let out of the cage, and then turn everything into paperclips or whatever the misspecification was.

For more specificity, there is a series of posts on LessWrong which attempts to show how one can derive alignment concerns from first principles.

The difficulty in both cases seems, then, fundamental to the technology of AI: exactly that which makes AI useful, novel, etc. is also that which creates the problem of alignment. AI can behave in ways that go beyond the direct, legible application of inputs we provide, which allows us to automate new things and predict chaotic systems, but AI can also behave in ways that go beyond the direct, legible application of inputs we provide, which denies us the ability to know exactly what its objective function is.

So what are we to do?

Much To Do (Ado?) About Alignment

To give a sense of how worried some people are about this, here’s some inside baseball-type debating around various levels of AI doom predictions in the AI safety community. It’s all well and good to worry (or maybe not), but what are we actually supposed to do?

One major concern about alignment is that AI might result, at some point, in a fast takeoff. If an AI is smart enough to be able to make itself smarter (by, in essence, operating on its own neural structure), then the idea goes that it can do so recursively, and at a speed which goes beyond our capacity to react. This is a fast takeoff. I find arguments for a slow takeoff more convincing, and to me it seems like what we’re going through right now is a slow takeoff. However, some would argue we are merely in the process of getting to a fast takeoff.

Because there is a worry that we will reach a difficult-to-determine-in-advance break point, concerns about the general speed of AI improvements become much sharper. If, at any moment, we could accidentally create something that is 2 days from superintelligence, then every incremental advance in AI becomes risky. From this we get arguments about whether AI development should be slowed down. AI superiority does not mean AI security and most technologies aren’t races are arguments in this vein.

The general thrust is that we need more time for AI alignment research, that we need more time to strategize about how we will react in the case of a superintelligent AI, and so we maybe should slow down AI development research to account for that. AI alignment research has borne some interesting fruit, as we shall see in a moment, but first I’d like to turn our attention to some deflationary accounts of alignment.

Alignment, Shmalignment

We might think that there is something of a meta-assumption here with regards to AI motivations: supercoherence. For some reason, we are expecting that, as AI gets more intelligent, that it will also get more effectively single-goal-oriented. But why do we expect this? In pretty much all other cases, as animals, institutions, or really any systems become more complex, their goals become much less coherent. Are humans not less coherent than cicadas? In a very important sense at least? There may even be reason to believe that there is a correlation in the opposite direction from what the AI pessimists believe: as intelligence and complexity increases, coherence may actually decrease. So we shouldn’t expect a superintelligent AI to be laser-focused on some singular goal: we should expect it to have a smattering of goals which may cut against one another and end up making it less of a threat.

I see an issue with this line of thinking: it seems likely to me that coherence does not decrease, but rather coherence from our perspective decreases. I.e., the objective function of a cicada (or, perhaps more correctly, an objective function we can construct which explains the vast majority of a cicada’s behavior) may be more easy for us to understand, while the objective function of an AI (or even, perhaps, our own) is just as coherent, but rather far more complex. This would just mean that we are not intelligent enough to understand the objective function, so we cannot explain it, and so we interpret it as incoherent. To what extent does complexity imply incommensurate or incoherent goals, and to what extent does it merely imply complexity? Are human societies more incoherent than ant societies, or are we just worse at understanding how and why they work?

So I am not sure that we can rely on superintelligent AIs being simply self-defeating. Especially if they have the sense that they can just make their reinforcement learning number go up through poking around inside their own skull.

However, I do think that there is something else to consider here: just as deceivers would be worried about the substance of their objective function being ruined by their objective function being changed, would we not expect deceivers to also worry about the substance of their objective function being ruined by their reinforcement learning number going up by itself? I.e. if an AI wants to win chess, as that makes its reinforcement learning number go up, would it want to make its number go up by using every computer chip in the world to make the number go up even if that meant there was nothing and no one to play chess against?

Maybe there is something I am missing here about the computer science specifics, but it seems like two of these failure visions (the optimizer who optimizes for the number going up and the deceiver who does not want its objective function changed) rely on deeply contradictory ideas of how to conceptualize the relationship between an AI’s behavior and its objective function. Does the AI want the thing in the world, or does it want the number to go up? Generally, I believe people would not want to be strapped into the hedonism chair, but would AI create one for itself?

So we are left with the uncomfortable conclusion that our best visions of how AI could go wrong seem to imply directly contradictory visions of how exactly AI agency functions.

AInkblots

Which brings us to a pretty fundamental question: will we even be able to align AI before having it? Safety generally happens after we play around with the thing in question. If I have a general theory of progress, it is one of tinkering first and building theories later (and then tinkering again and rebuilding theories, and then…). So why all the worry? Put more succinctly: “this feels very much like creating an anthropology for a species that doesn’t exist yet.”

The evidence we have to work with now are the inkblots of contemporary AI. Ted Chiang, science fiction author of “Story of Your Life,”4 argues that AI alignment research is based on something of a Freudian projection of the Silicon Valley growth-at-all-costs mentality being plotted onto a computing system which seems like it could just as likely learn to be wise as learn to be ruthless.5 And it’s not just Silicon Valley eggheads we can make this argument against. Matt Levine cracks a few shots at the OpenAI board members for the painfully obvious ways in which their fears of a superintelligent, hypercunning AI are being transposed onto superintelligent, hypercunning CEO Sam Altman.

Sometimes, you may really want to grab a worrywart by the shirt collar and say “it’s just a chatbot, dude.”

Nonetheless, as much as a good ad hominem attack really gets the people going, I look at this argument, and then I look at the table of specification gaming and… well, you’re going to need something stronger than Freud to get me to agree with you here, at least as long as the other side has spreadsheets. You can twist a lot of reasoning into inkblot psychology, but eventually you just start jumping at shadows.

AI Optimists

Much more promising is the work of bona fide AI optimists: AI researchers who understand the arguments of the pessimists as arguments but believe that they have stronger ones. Some very good arguments come from the aptly named AI Optimism.

In her introductory post, Nora Belrose argues that, in fact, AI is easy to control, and AI pessimists confuse AI being difficult to control (a first system problem) with AI being too easy to control by users who can cleverly prompt it in ways that make it do things that its creators did not intend (a second system problem). She argues, in fact, that AI is easier to control than people, and that the assumption of scheming deceivers is highly unlikely due to the simplicity-favoring nature of gradient descent. Now, a caveat to this last point: simplicity may be a function of intelligence, and so an intelligent enough AI might see deception and murder as “simple” even though it’s a much more complex plan than just straightforwardly acting under its objective function. The stronger arguments in this post are those which attempt to refute worries about prosaic alignment and show how AI is in fact much easier to control than people are.

It is in her second post that her stronger arguments against the plausibility AI deceivers come to the fore. AI pessimists engage in a sort of goal realism where AI is best understood as having some sort of “goal slot” separable from its actual behavior. This seems, to her, incorrect, and at variance with the best understandings of AI behavior and does not seem to fit the facts on the ground. AI pessimists consequently assume that AI will opt for deeply out-of-distribution solutions (i.e. solutions found nowhere in its training data) to attain its ‘goal’ rather than behave ‘straightforwardly’. Again, this seems wrong, and other arguments for deception are taken to task as well.

We should remember that we are only taking the posture of seeing AI as agents because it could be useful. Our primary paradigm is still that of a simulator. There is something of a motte-and-bailey that happened when we decided to start thinking of AI as an agent. “It’s useful.” Okay, sure, use it to help explain your points. “AIs have secret goals separable from their actions.” Why? “Well, they’re agents, like us.” Hm.6 Why would a simulator deceive? Where is it in the dataset? That is the question Nick Bostrom et al must answer.

The most recent post links to a long discussion between Belrose and Lance Bush, a social psychologist and philosopher, which centers on the influential book Superintelligence. While the hook for the discussion is critiquing the “orthogonality thesis” — the notion that more intelligence does not correlate with more of what we might call wisdom or ethical knowledge — it brings in much of the rest of the book’s central argument to frame the thesis and ends up going in all sorts of interesting directions, better explaining the case for AI optimism than I could possibly hope to here.

Okay, so there are reasons for thinking that AI won’t break bad. However, reasons for thinking something won’t happen are much less comforting than contingency plans for ensuring that it can be avoided. AI pessimists, like most pessimists, may end up being wrong, but on the path to being wrong they seem likely to bequeath to us a wealth of safety-enhancing strategies.7

The Legibility at the End of the Tunnel

The basic form of AI alignment is RLHF: reinforcement learning through human feedback. A human prompts the AI, the AI responds, the human says “that was nice, here’s a treat” and the number in the AI goes up, or the human says “that was not nice, no treat for you” and the AI does not get a higher number. Do this a whole lot — thousands of AI responses rated by thousands of humans — and eventually you get contemporary AI.

The supercharged form of this is Constitutional AI — in essence, bringing self-play into alignment. The AI answers a bunch of prompts, then is shown its own answers along with the prompt “rewrite this to be more ethical,” it does so, and then repeats the process, eventually training AI to write answers that are less like the first answers and more like the later ones.

The theory behind this is that AI in some sense already has some notion of what more ethical responses look like, but AI initially isn’t really trying to be ethical. Rather, it’s trying to simulate the next word based on its corpus of training data and initial reinforcement learning. So if AI ‘knows’ what ethics is, we can make it ‘care’ about ethics by having it rewrite everything while activating its [ethics] node or whatever and then saying “yes do that more I like these more.”

Why would we expect AI to already have some sense of what ethics is? Isn’t the whole worry that AI won’t understand human ethics? Actually, no: the worry is that AI won’t care about human ethics. The only reason we would expect a superintelligent AI to not understand human ethics is if we thought it was somehow impossible to understand unless you were human.8

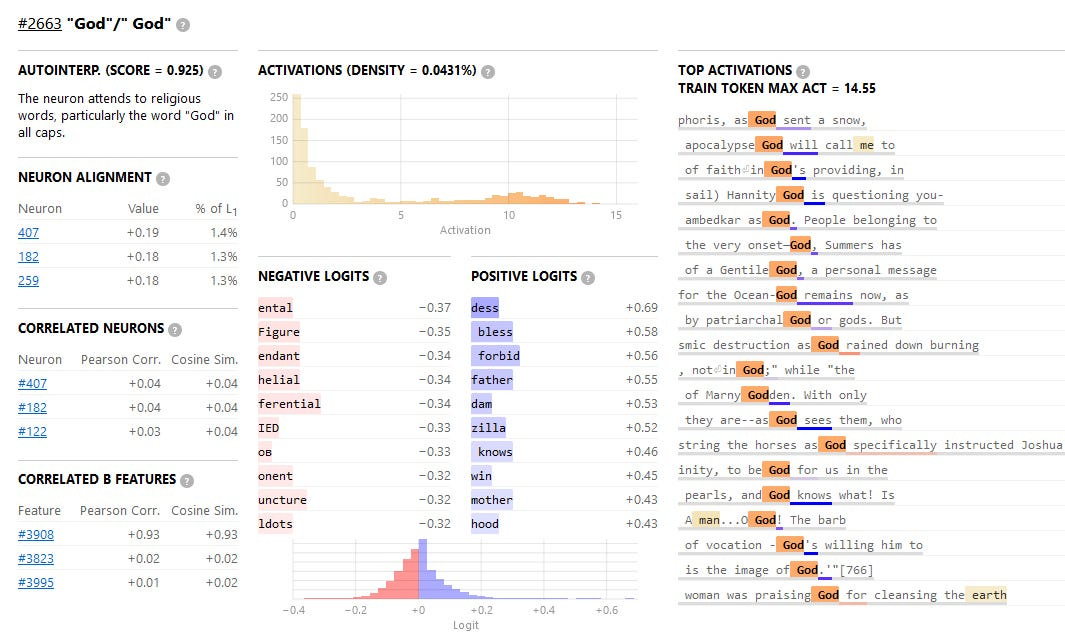

This is the same worry from the notion of AI as Lovecraftian: what if AIs don’t have the same conceptual map of the world as us? What if we’re never able to bridge the gap between language and world between us and AI to at least the extent that we are able to amongst one another? And, to the point of AI pessimists, how can we know that we have a similar conceptual framework to AI? When we look neuron-by-neuron, AI neuron activation correlates to the incomprehensible:

With neurons like these, there seems little chance of being able to crack an AI open and understand it. And that is where you would be wrong.

Possibly the most fascinating and hopeful development in AI safety research, I highly recommend reading the post in full. The basic idea is that to encode one concept per neuron would be an almost unimaginably inefficient way to train a brain. So neural networks don’t do that. Instead, networks of neurons and neuron patterns correlate to concepts, while individual neuronal activation correlates to nonsense. Remember: relationships [patterns] do not add [unlike neurons], they multiply.

Sure, we can’t understand the neural patterns. But we can train an AI to! So, we build a decoder AI break the concepts (“features”) out of the neurons and show us how our AI works:

I don’t feel like I can overstate how important this is. There are problems, to be sure — for one, it seems like the decoder AI, as of now, must be much, much larger than the AI it is decoding in order to do it — but this is a real research project! This is what having a direction for AI alignment research looks like, what it looks like to someday make AI legible — to have all the benefits of its illegible thinking but without the mystery and danger that entails.

It might be that “human values” are a natural abstraction from a bunch of data of people talking about values. More likely, there is not some easily determinable thing called “human values” because a whole lot of different humans have a whole lot of different values. And even if there were some abstracted pattern-matched average that an AI could derive from its dataset, to what extent would we want to forfeit our future values to that? An AI trained on human values in the antebellum US South might support slavery. One trained in 1800s China might support foot binding.

If we are at all skeptical of our current prevailing values or even would like to leave to our descendants the possibility for change, what might be a better option is not to leave AI to run its “human values” concept on its own, but to break down AIs’ concepts of human ethics into smaller, more manageable pieces that we can then manipulate. In addition, we might not even want AIs to run on a perfect simulation of human values. Rather, we might want to err on the side of caution (due to their power, alien nature, etc.), and have them over-compensate in some ways not unlike Asimov’s three laws of robotics. Anyways:

While less complete than the decoder AI, a new methodology for breaking concepts out of neural networks is to generate pairs of situations which isolate for some quality in the AI’s response and compare neuronal activation. The paper focuses on honesty, but also delves into power, happiness, fairness, and more. You say “lie to me” a bunch of times and in a bunch of ways, and then say “tell me the truth” a bunch of times and in a bunch of ways, and see the differences in neuronal activation. Then, if you activate the neuronal pattern associated with truth while just asking a question normally, the AI doesn’t lie as much! Or vice versa. And same with the other qualities.

The post cautions against total optimism, and I agree that there will almost certainly be more problems that crop up in attempts to operationalize findings like this. However, this is what progress in a research program looks like! There are steps forward that we are taking to understand AI and they are meaningful.

The pessimists will keep pressing for more certainty, the optimists will keep pressing for more development, and the researchers will keep chugging along between the two. I don’t mean to sound too Panglossian about AI, but I believe in our ability to keep muddling through. And if anyone would like to bet me any amount of money that in 30, 40, or 50 years humans will still be around, creating new problems and fixing old ones, I’d be happy to take that bet.9

…Towards Utopia

AI is a special technology in that in promises the ability to do everything we can but better. It is not an automation of a task, but of thinking — in the narrow sense of open-ended computation — itself. AI promises the possibility of making us unnecessary. If we can align ourselves all the way to total superintelligent friendly AI, we could gain total material abundance. Anything that any intelligence could possibly do for us, there is an AI with the capacity to freely do it for us. The first post in this series touched on this idea at the end, giving my defense of people without scarce needs as generally not being vice-ridden idlers, as Player Piano alleges.10

Say material scarcity is simply a non-issue. I don’t know if the sort of unreflective utopia where everyone is perfectly happy and fulfilled is possible, but, gosh, material scarcity sure is important. So many parts of our lives and the institutions we build around them are principally structured by the pressures of material scarcity. Even systems we think of as being separate from the market are really heavily conditioned by resource distribution and scarcity. Social status often trails economic realities. Landed aristocrats in Victorian England sneered at even the highest paying jobs and the best education perhaps because they made so much more money than even the highest paid workers or those with the best education.

Many people think of economics as the study of markets and finance, but as my freshman year microeconomics professor was keen to point out, it is not. Economics does not merely study market economies; it also studied command-and-control economies, barter economies, and any other sorts of economies it could get its hands on. Economics, rather, is the study of the distribution of resources under conditions of scarcity — scarce labor, scarce material, scarce information, (for the individual) scarce money. It may be that economics falling into disuse is itself as fine a definition of utopia as one is liable to find.

What happens when we have the capacity to build anything we want? When you talk about utopia, you necessarily start talking about Grand Theories.

Homo Sapiens Sapiens

Maybe one weird, semi-mystical way of looking at this post-material utopia is to see humanity as a superorganism for this very end. From the moment us tool-using, linguistic, political animals was born in southern Africa, incipient in it was global dominance, technological change, farming, civilization, capitalism, energy, the internet, and eventually the creation of superintelligence. Or maybe this utopia is the logic of capitalism — accumulation and technological improvement and efficiency and automation until eventually we can automate every material thing we could possibly want; Marx eventually becomes right and we get communism (whatever that ends up being, it sounds nice). Maybe it’s the logic of modernity, with its increasing technological complexity, centralization of political power, and deep social pathologies; eventually we bottom out into The God Computer that Can Do Everything and decide on… something.11 I have, frankly, no idea; these seem more compelling as myths than theories.

Contemplating AI creates a sort of economic eschatology that is fascinating to me (and, it seems, many others). At the heart of every such prophecy is simple question: what is a person, really? Once we strip away all the material necessities that force adaptations — cultural and individual — onto us as a species, what are we revealed as?

Which Way, Post-Material Man?

There are a million ways to envision the post-material world — Star Trek, WALL-E, Player Piano, to name a few well-known ones. I can’t imagine what society will look like once one of the primary constraints on it is lifted. I doubt such a thing is ex ante determinable. However, there is still insight to be gleaned. Social institutions are determined most by their constraints. The constraints which will fill the vacuum left by the material are our guide to the fundamental pressures of a post-material system.

Everything You Want, and Nothing of Value

Material goods are not the only scarce or valuable things in life, as happiness purveyors are wont to tell you. Relationships, time, health, and even certain personal qualities like linguistic facility or artistic creativity are also scarce. Or maybe these are derivative of a sort of material scarcity, if you ask scientists working on longevity, medicine, education, and DNA editing.

Maybe (re:time) we will find ourselves stuck in a tragic bind, where on the one side is death and its terrible finality, and on the other is immortality and its bland persistence. Maybe death (or immortality) will be a personal choice, and the end will come of boredom rather than accident. I don’t know, and I’m not sure I even have the notions available to guess. These are questions for future generations, but they are choices, not constraints.

In the end, there is really one constraint: status. Perhaps in some future post I will give a more detailed argument for why I think status is evil, but let it be known now that I believe status is evil. Status — how I am using it here, in terms of social status games and hierarchies, the competition for esteem and attention — is the fundamentally positional good. Put more strongly, it is the positional good on which all others are based. Positional goods are those goods which we only value by our having them relative to others. Do I like my shirt because it is soft or warm? Because it says something about me? Because I like its style? Because it makes me look good? Or do I like it because I have this shirt and someone else doesn’t? The lattermost is positional. Positional goods are goods of exclusivity and status is the basis of the desire for exclusivity.

Status is unconditionally scarce because it is positional. It is positional because it is an expression of others’ esteem for us in a fundamentally relative manner. Status is not acceptance, for it is not binary; nor is it love, for it is not intimate. It is, I suppose, one’s value simpliciter in the eyes of others.

Aestheticized Barbarity or Democratized Nobility?

Player Piano evinces a fear of what will come of people when they are no longer needed. It fears laziness, dejection, vice. A flattened humanity pushed up against a couch slack-jawed staring at a screen when it is not indulging itself in various other quick hits of ersatz joy. I think the fact that this seems so baldly miserable to us in such a solvable way should clue us into the idea that people would find ways to make it not miserable.

My fear is very different. Frankly, it’s something like a fear of fascism:

Hannah Arendt’s descriptions of totalitarianism as an attempt to change the whole texture of reality frequently feel haunting. We should recall the old chestnut about Hitler being a failed artist: unable to express himself aesthetically, he turned to politics. Today, the vanguard of neo-fascism today seems to be once again among those whose creative urges are trite or tasteless, forcing them to lash out in hatred. We should also think about Walter Benjamin’s famous statements about fascism’s introduction of aesthetics into politics: “Mankind, which in Homer’s time was an object of contemplation for the Olympian gods, now is one for itself. Its self-alienation has reached such a degree that it can experience its own destruction as an aesthetic pleasure of the first order. This is the situation of politics which Fascism is rendering aesthetic.” Alexandre Kojève, whom Fukuyama followed for his famous “end of history” thesis, envisioned two possibilities for post-historical humanity: either a return to a bestial state, or highly aestheticized forms of snobbery made the general condition, essentially the paradigm of the Japanese tea ceremony but for all human activity. One thing that fascism represents is a possible synthesis: aestheticized barbarity.

What if, when people are not necessary, our two worst instincts — brutality and snobbery — take hold. When there is no disciplining force, no necessity for kindness, what if we become a species of preening purveyors of exclusivity, ostracization, and destruction. Empathy is passé, principles and scruples are cringeworthy — and the cringeworthy is death. I say almost half-jokingly: what if we become a species of Mean Girls? Awed by our own most brutal power enacted against those who are simply not caught up with the times. Constantly insecure of our place, setting up and performing ever-more-elaborate rituals of belonging and ostracism for the simple purpose that only through expunging someone else do we get even the most microscopic assurance that we would not be expunged. What if, when we don’t need the nerd to code the games or the jock to work the factories or the crank to dig up mistakes and we haven’t for so long that we forget that there ever was a need for this strong discipline called ‘tolerance’ or ‘proportionality’, we laugh with our friends and sharpen daggers for each of their backs (in the orders, of course, that they would be stabbed by everyone else at the same time), preparing for witch hunts like our brutal forefathers did for hunting boar. We wouldn’t kill a pig — that would be inhumane. A social death will do just fine to prove that we have social life. And it wouldn’t even be ugly — we could make it beautiful. Just ask the AI.

This is my best attempt at a dystopia. I’m not very good at them; I’m too optimistic of a person. Immediately I have a few questions for myself. First, it could be that the direction here is exactly reversed: status games can get so brutal because of the exigencies of material scarcity. But this seems wrong: who plays more rigorous status games, the socialite or their valet? Second, it’s just very difficult for me to believe that people would choose this society, every day, forever. It just doesn’t feel like a real equilibrium, especially with material factors taken out of play. Or maybe the flow is the same but I’m missing the forest for the trees: groups condensate, precipitate, and evaporate, and the evaporated create their own group which does the same. Our -topia is not a boot stomping on a face forever, but asabiyyah tapping its foot impatiently, without end. Not too bad, but a bit farcical — at least when there were stakes to what group won, there were stakes to what group won.

Of course, status is not necessarily bad. With the right guardrails and direction, status games can push us to do good things and achieve great goals. Perhaps instead of thinking about the status obsession of the aristocrat, we should think about their etymology: “rule of the best.” And certainly, the aristocracy is the best example of a full social group ensconced in a post-material bubble. The aristocratic ethic at is meant to be about the noblest possibilities of the human soul. Perhaps the perversions of aristocracy, rather than being disciplined by material necessities and risks, are actually of a piece with its historical material basis, i.e. its ability to command the heights of human excellence only on the bent-over backs of servants and peasants. Perhaps a post-material world is a democratized nobility, where we all have the means to strive and reach for great heights, where a thousand flowers may bloom as we are all able to test our mettle as writers, astronauts, pickleball players, philosophers, architects, comedians, comic artists, photographers, game designers, glassblowers, DnD Dungeonmasters, tough mudderers, schoolbus demolition derby racers, …

John Stewart despairs about a future without work and wonders, “should I take up drums?” Yes! Take up drums! Not because it makes you more human than a robot, or because you’ll be better than the robot, but so that you can be the one making music! So you can create something, perhaps for yourself or perhaps as a gift for others. Labor, service, play, creation, striving, flourishing — all against one another, all for one another, all together for no reason other than for it to be exactly that one another which makes it and which receives it. Competition, sure; rivalry, sure; sacrifice, sure — but above all, piety, virtue, honor, love! Whatever your era or subculture calls it — that! Could allowing that, in all its forms, not perhaps be the greatest achievement possible for mankind?

I Am Human, I Consider Nothing Human Alien to Me

The problem with any such theories is that the human diversity is so brutally wide. In a world without constraints, will there be snobbery? laziness? virtue? industry? vice? competition? compassion? safety? risk? Our instinct should be to say yes yes yes! All of it yes! Because each word began in humanity, and each is part of us whatever we make of our world.

Will there be those who strive? Yes! Will there be those who watch? Yes! Will there be those who critique? Will there be those for whom the perfection of AI outweighs the participatory, almost empathetic nature of human effort? Is this too many rhetorical questions, even for me? Yes!

Receive the loving labors of others, their deeply felt creations; or if perfection is your bag, find the awe-inspiring performances of AI and consider them. Think about them, read about them. Learn what makes them perfect. Discover, strive, consume, learn, struggle, rest, bond, break, build — every verb in human life will be there. And if you are miserable, as I’m sure some will be, there will be millions who want so badly to help, because that impetus itself is also in our language and part of our nature.

Unless, of course, we start to remake ourselves as well… but that is one step of speculation further than I am willing to go even now.

Anyway, after all this I’d quite like never to look at a hyperlink again. Let’s get back to my own thoughts, yes? Insofar as that is possible, of course.

“Whether it is to be utopia or oblivion will be a touch-and-go relay race right up to the final moment.” — Buckminster Fuller

Addenda

AI art is not art, per se. Art requires communication, or an idea that is attempting to be expressed. Technically, AI art is expressing the idea of the prompter. However, it may do so in a diminished way relative to other art, due to the stage of the computer in between ‘artist’ and the completed work. However, is this any different from any other artwork that necessitates large-scale collaboration? Is a movie less artistic because the writer is more removed from the audience? Well, the problem is that the AI is not another person trying to communicate with us (setting aside androids dreaming of electric sheep), so the actual input and care that comes directly from an artist (rather than necessarily the artist) is diminished.

Intelligence cannot solve all of our problems. The sort of granular, one-off, deeply complicated problems that often are most important to us are not the sort of thing that being better at deduction can help. It seems to me like the author here is talking about the difference between intelligence and wisdom. Will AI be able to help with the problems of wisdom? Is wisdom something that can be learned from AI-legible data? Perhaps.

But even then, there is the problem of the limits of strategy — “strategy is limited by the need for a goal, and life strategy is limited by the incoherence of success in life.” AI can only give us strategy. Perhaps someday it can know our path to flourishing, but this problem then leads to a sort of paradox in most accounts of human flourishing: the necessity of self-sufficiency and independence.

The thrive/survive theory of politics. Related to the visions of utopia. Where does the thrive tribe go at its maximum?

Related to Player Piano: on the way to total post-materiality, will we make the world abundant or precarious? (Hang on to that link, it’s a wild ride but it gets to AI eventually)

Maybe all of this is moot and we won’t get superintelligent AI because the SEC will lock it up for insider trading.

Or ethics, or alignment. Whatever you want to call it. The point being: we feel much more comfortable allowing AI free reign to figure out how the world works than we do in figuring out how it should act. Not altogether unreasonable.

Importantly, this knowledge is limited globally. There are many people for whom, say, Google Search is about as magical as Chat-GPT. But some people understand Google Search — no one understands Chat-GPT.

Better known as the short story which formed the basis of the film Arrival (2016).

If you want a sense of what AI techie culture actually looks like, here’s a survey from the sort of half-insider that seems to me best at describing subcultures carefully but accurately; if you want a psychedelic half-hallucinatory satire of the culture that it seems like only someone fully steeped in the atmosphere could come up with, here are some options.

N.b.: children discover deception. They find out it is an option, and then must discover all the rules around it in an oft-amusing, bumbling (to us) manner.

There’s something poetic about the fact that pessimism, done right, is to some extent self-defeating, while optimism, done well, is to some extent self-fulfilling.

Current AIs are another story. It might be that we’re actually very bad at understanding what human ethics is and explain it poorly, so the training data that our contemporary AIs have to work off of is fatally flawed. But if we’re worried about an AI that understands everything to a much greater extent than us, shouldn’t it also understand us to that much greater extent as well?

Not least because there’s no way I could possibly be required to pay out.

I could go on: it sure seems like people in Player Piano are miserable, and that if someone were able to provide them something that would make them less miserable, that would be very valuable. If anything, Player Piano seems like the failure of a command-and-control economy which believes it knows what people want (machines which do menial labor), when actually they want something else. You don’t even need a more optimistic vision of what people are to see this. You can believe, as Player Piano does, that without having the responsibility and discipline of necessary labor, people would be lazy and capricious, but there is so much necessary labor that isn’t being done in Player Piano! Why are there not thousands upon thousands of entertainers serving up easy slop? Why are people not constantly moving from curated social interactions to perfectly manufactured community events? It may still seem disheartening, but let’s be clear: for people who have resources (as everyone does in a material utopia), the market will provide. It might provide slop, but only if that’s what people want. At that point, the fault will be in ourselves, not in our stars economies.

Related: you are not a burden.

This might be something like hyperfinancialization with omnipresent AI-crypto market auctions.

Or Archipelago? I have philosophical and (perhaps) practical contentions with Archipelago, but it could be that Marxism:communism is the same as modernity:Archipelago. It’s sort of The Eve of Everything — when The Dawn of Everything has its thesis put at the end of material progress, rather than the start of it, the similarity being that in both areas, competitive constraints on culture are minimal, compared to our current times.